College of Engineering Unit:

Our project partner conducts research on robotic manipulation and the benchmarking of grasping tasks, which requires collecting large amounts of real-world data. Running these experiments requires the robotic arm and camera to be calibrated to the environment. Before approaching the CS Capstone program, our partner relied on unrefined command-line tools for workspace calibration, and visualization and simulation were done separately from calibration, with no unified system. As the research grew, our partner asked us to build a more complete software system to handle these tasks.

Our Calibration Process

-

Launch GUI calibration interface and select desired settings.

-

Calibrate the main camera using the GUI walkthrough, using OpenCV and ROS interface to find camera parameters.

-

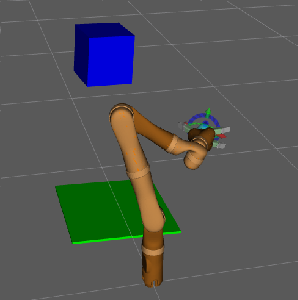

Drive robot arm to touchpoint locations and record joint angles using the GUI. (Location of the arm is calculated using IK and ROS relative to the table frame).

-

Choose to save calibration information or visualize the calibrated system in Rviz.
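
The touchpoint step can be illustrated with a small sketch. The description above does not say how the recorded touchpoints are turned into a table-frame pose, so the following is only one common approach (a least-squares rigid fit, often called the Kabsch method) and not necessarily what our system does. It assumes the touchpoint coordinates are known in the table frame and that the matching end-effector positions in the robot base frame have already been computed from the recorded joint angles. The function names `fit_rigid_transform` and `rms_residual` are hypothetical.

```python
import numpy as np


def fit_rigid_transform(table_pts, base_pts):
    """Return (R, t) minimizing sum ||R @ p_table + t - p_base||^2.

    table_pts: (N, 3) touchpoint coordinates in the table frame (assumed known).
    base_pts:  (N, 3) matching end-effector positions in the robot base frame
               (assumed to come from the recorded joint angles).
    """
    table_pts = np.asarray(table_pts, dtype=float)
    base_pts = np.asarray(base_pts, dtype=float)
    if table_pts.ndim != 2 or table_pts.shape[1] != 3:
        raise ValueError("points must have shape (N, 3)")
    if table_pts.shape != base_pts.shape:
        raise ValueError("point sets must have the same shape")
    if table_pts.shape[0] < 3:
        raise ValueError("need at least three non-collinear touchpoints")

    # Center both point sets so only the rotation remains to be solved.
    c_table = table_pts.mean(axis=0)
    c_base = base_pts.mean(axis=0)

    # Cross-covariance between the centered sets, then SVD.
    H = (table_pts - c_table).T @ (base_pts - c_base)
    U, _, Vt = np.linalg.svd(H)

    # Flip the last axis if needed so R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_base - R @ c_table
    return R, t


def rms_residual(R, t, table_pts, base_pts):
    """Root-mean-square distance between mapped table points and base points."""
    table_pts = np.asarray(table_pts, dtype=float)
    base_pts = np.asarray(base_pts, dtype=float)
    mapped = table_pts @ R.T + t
    return float(np.sqrt(np.mean(np.sum((mapped - base_pts) ** 2, axis=1))))
```

With real measurements the residual is a quick sanity check: a value well above the arm's positioning accuracy suggests a mis-recorded touchpoint. Touchpoints that all lie on the table surface are fine for this fit as long as they are not collinear.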