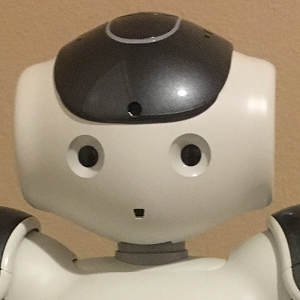

Our project involves working with a robotic stand-up comedian to improve its ability to detect and analyze audience responses to the jokes it tells. Based on the amount and duration of detected laughter, it rates each joke as a success, a failure, or something in between. After a successful joke it can make a follow-up remark acknowledging the audience's approval; after a failed joke it can remark that the joke did not connect. Adding these tags to the jokes shows an understanding of the interpersonal communication being built between the comedian and the audience, which improves the human-robot interaction and results in a more successful performance than if the robot merely moved from one joke to the next for its entire routine.

Our group was given two specific tasks: to improve the robot's decision making when analyzing post-joke laughter, and to introduce new functionality for analyzing mid-joke laughter as well. To do this we used a dataset of recordings from the robot's previous comedy performances, split them into shorter clips containing only the mid-joke or only the post-joke audio, and extracted audio features such as pitch and intensity from each clip. We then designed and trained a machine learning classifier on this data, using human ratings of the audio clips to learn which feature values indicate laughter at a successful joke and which indicate a muted reaction to a failed one.
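
To make the clip-to-label pipeline concrete, here is a minimal sketch of the general idea, not the project's actual implementation. It assumes each clip is already available as a list of audio samples, uses RMS amplitude as a stand-in for intensity and zero-crossing rate as a crude proxy for pitch, and swaps in a simple nearest-centroid classifier because the specific model is not named here. All function names and the synthetic clips are hypothetical.

```python
import math
from collections import defaultdict


def rms_intensity(samples):
    """Root-mean-square amplitude, a simple stand-in for intensity."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs that change sign (crude pitch proxy)."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    return crossings / (len(samples) - 1)


def extract_features(samples):
    """Turn one audio clip into a small feature vector."""
    return (rms_intensity(samples), zero_crossing_rate(samples))


def train_centroids(feature_rows, labels):
    """Average the feature vectors that share a human-assigned label."""
    grouped = defaultdict(list)
    for row, label in zip(feature_rows, labels):
        grouped[label].append(row)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


def synthetic_clip(amplitude, freq_hz, sample_rate=8000, seconds=0.25):
    """Hypothetical test signal: a sine tone standing in for real audio."""
    n = int(sample_rate * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


# Made-up training data: loud clips labeled as laughter, quiet ones as not.
training_clips = [
    synthetic_clip(0.8, 300), synthetic_clip(0.9, 350),
    synthetic_clip(0.05, 120), synthetic_clip(0.04, 100),
]
training_labels = ["laughter", "laughter", "no_laughter", "no_laughter"]

centroids = train_centroids([extract_features(c) for c in training_clips], training_labels)
loud_prediction = predict(centroids, extract_features(synthetic_clip(0.7, 320)))
quiet_prediction = predict(centroids, extract_features(synthetic_clip(0.05, 110)))
```

In practice the features would come from real recordings and would likely need scaling before any distance-based comparison, since intensity and pitch are measured in different units.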

We created two classifiers, one for the post-joke audio and one for the mid-joke audio. The goal was that, when the two are used together, the robot's final prediction of whether a specific joke succeeded, based on its analysis of the audience's reaction, would be as accurate as a human rater tends to be when rating the jokes. The post-joke classifier uses the same three-point scale described above (strong laughter from the audience, minimal or no laughter, or something in between), while the mid-joke classifier uses a simpler two-class rating (laughter present or no laughter) and doesn't attempt to rate the quality of the laughter. This is because mid-joke audio poses the added challenge of ignoring the sound of the robot telling the joke, so it is better to simply detect whether any laughter occurred than to attempt to classify it further.
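
The two label schemes can be written down as a small sketch. How the two outputs are merged into the final prediction is not specified here, so the `combine` rule below is purely an illustrative assumption (mid-joke laughter nudges an ambiguous post-joke rating upward), and the names `PostJoke`, `MidJoke`, and `combine` are hypothetical.

```python
from enum import Enum


class PostJoke(Enum):
    """Three-point scale used by the post-joke classifier."""
    FAILURE = 0      # minimal or no laughter
    IN_BETWEEN = 1
    SUCCESS = 2      # strong laughter


class MidJoke(Enum):
    """Two-class rating used by the mid-joke classifier."""
    NO_LAUGHTER = 0
    LAUGHTER = 1


def combine(post, mid):
    """Assumed rule, not the project's: upgrade an in-between rating
    to a success when laughter was also detected mid-joke."""
    if post is PostJoke.IN_BETWEEN and mid is MidJoke.LAUGHTER:
        return PostJoke.SUCCESS
    return post


final_rating = combine(PostJoke.IN_BETWEEN, MidJoke.LAUGHTER)
```

Keeping the mid-joke output binary means it can only confirm or fail to confirm laughter, which matches its role as a coarser signal than the post-joke rating.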