This project aims to translate American Sign Language (ASL) in real time using an Intel RealSense depth camera.

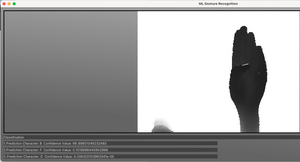

Neural networks have attracted a great deal of attention in recent years because they can be applied to complex problems such as image recognition. By combining recent advances in recognition software with a camera that senses depth, we can tackle tasks that were once considered too abstract for a computer to handle. The goal of this project is to automate real-time ASL translation while demonstrating several Intel technologies that use neural networks. A device that recognizes these gestures can help people who use sign language communicate with those who do not.

The system uses an Intel RealSense SR305 camera to capture depth-based images. Because each pixel carries a distance, most extraneous information, such as the background behind the signer, can be removed before the preprocessed image is displayed in a GUI. Several networks with different topologies are available to classify these images, and the user can choose among them through the interface. The selected network then processes the video feed and classifies gestures in real time, including gestures that involve movement.
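As an illustration of how depth data makes background removal straightforward, the sketch below applies a simple distance cutoff to a raw depth frame with NumPy. It is a minimal example under stated assumptions, not necessarily this project's own preprocessing: the function name `remove_background` and the 0.8 m default cutoff are hypothetical, and `depth_scale` (metres per raw depth unit) is assumed to come from the camera. The RealSense SDK reports it per device, for example via `first_depth_sensor().get_depth_scale()` in `pyrealsense2`.

```python
import numpy as np


def remove_background(depth: np.ndarray, depth_scale: float,
                      max_distance_m: float = 0.8) -> np.ndarray:
    """Zero out every pixel farther than max_distance_m (hypothetical helper).

    depth: 2-D array of raw depth units, e.g. uint16 values from a z16 stream.
    depth_scale: metres per raw depth unit (assumed to be read from the device).
    max_distance_m: assumed cutoff; anything beyond it is treated as background.
    """
    # Convert raw units to metres for the comparison.
    distance_m = depth.astype(np.float32) * depth_scale
    # A raw value of 0 means "no depth reading", so treat it as background too.
    foreground = (depth > 0) & (distance_m < max_distance_m)
    # Keep the original raw values for foreground pixels, 0 elsewhere.
    return np.where(foreground, depth, 0).astype(depth.dtype)


if __name__ == "__main__":
    # Toy 2x2 frame with 1 mm per unit: 0.5 m survives, 1.0 m and 3.0 m are dropped.
    frame = np.array([[0, 500], [1000, 3000]], dtype=np.uint16)
    print(remove_background(frame, depth_scale=0.001))
```

A fixed cutoff is the simplest choice; a real system might instead track the nearest object or adapt the threshold to the signer's position, but the masked frame is what a classifier would then receive in place of the full scene.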