College of Engineering Unit(s):

Electrical Engineering and Computer Science

Team:

Claire Hekkala

Project Description:

My research is aimed at improving the decision-making capabilities in autonomous vehicles to make them safer and more reliable. To this end, this project seeks to understand how neural networks make decisions by extracting temporal logic formulas from time series data using machine learning. In other words, it focuses on building a machine learning model that is able to describe its own decision-making process when given data that changes over time. It does this by creating a formula that contains logical operators such as AND, OR, NOT, EVENTUALLY, and ALWAYS.

Put simply, this research explores how machines make decisions. My goal is to have a machine learning model output a mathematical formula that describes how it makes choices. If this approach were used in a self-driving car, it could make the car safer, because experts would be able to check that the car is making choices based on the correct information.
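To give a feel for what a formula built from AND, OR, NOT, EVENTUALLY, and ALWAYS means on data that changes over time, here is a minimal sketch in Python. It is not the project's code: the helper names, the thresholds, and the sample distance values are all hypothetical, and it assumes a finite, discretely sampled signal where EVENTUALLY and ALWAYS look from the current sample to the end of the recording.

```python
from typing import Callable, Sequence

Signal = Sequence[float]
# A formula maps (signal, time index) to True or False.
Formula = Callable[[Signal, int], bool]


def atom_less_than(threshold: float) -> Formula:
    """Atom: the signal value at time t is below the threshold."""
    return lambda signal, t: signal[t] < threshold


def NOT(f: Formula) -> Formula:
    return lambda signal, t: not f(signal, t)


def AND(f: Formula, g: Formula) -> Formula:
    return lambda signal, t: f(signal, t) and g(signal, t)


def OR(f: Formula, g: Formula) -> Formula:
    return lambda signal, t: f(signal, t) or g(signal, t)


def EVENTUALLY(f: Formula) -> Formula:
    """True if f holds at some sample from t to the end of the signal."""
    return lambda signal, t: any(f(signal, k) for k in range(t, len(signal)))


def ALWAYS(f: Formula) -> Formula:
    """True if f holds at every sample from t to the end of the signal."""
    return lambda signal, t: all(f(signal, k) for k in range(t, len(signal)))


# Hypothetical distance readings over five time steps (illustrative only).
distance = [9.0, 7.5, 6.0, 4.0, 5.5]

# "The distance never drops below 3" and "the distance eventually drops below 5".
stays_clear = ALWAYS(NOT(atom_less_than(3.0)))
gets_close = EVENTUALLY(atom_less_than(5.0))
both = AND(stays_clear, gets_close)

print(both(distance, 0))
```

A formula like `both` is the kind of human-readable statement an expert could inspect, which is the motivation described above.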

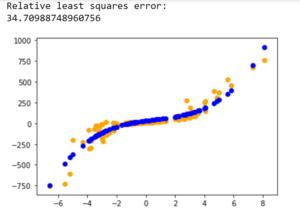

Two strategies were pursued during this research. First, a generative adversarial network (GAN) was combined with existing temporal logic extraction code by Belta, by injecting the formula-generating code into the GAN's discriminator. This approach proved infeasible because of the difficulty of moving data back and forth between TensorFlow and MATLAB. The second approach used recurrent neural networks (RNNs) with custom layers, where each layer represented an atom or a temporal logic operation. The layers' weights were then trained to decide which atoms and operators produced the most accurate formula; this was done by comparing the RNN outputs with formula robustness values calculated by Breach.
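For readers unfamiliar with robustness values, the sketch below shows one common way they are defined for sampled signals: an atom such as "distance > c" scores `distance - c`, AND and ALWAYS take a minimum, OR and EVENTUALLY take a maximum, and NOT flips the sign. A positive score means the formula is satisfied, and its size says by how much. This is an assumption about the general technique, not a reproduction of Breach's implementation or of the project's RNN layers; every name and number here is hypothetical, and `squared_error` only illustrates what comparing a network output with a reference robustness value could look like.

```python
from typing import Callable, Sequence

Signal = Sequence[float]
# A robustness function maps (signal, time index) to a real-valued score.
Robustness = Callable[[Signal, int], float]


def greater_than(c: float) -> Robustness:
    """Atom 'signal > c': positive when satisfied, negative when violated."""
    return lambda signal, t: signal[t] - c


def not_(f: Robustness) -> Robustness:
    return lambda signal, t: -f(signal, t)


def and_(f: Robustness, g: Robustness) -> Robustness:
    return lambda signal, t: min(f(signal, t), g(signal, t))


def or_(f: Robustness, g: Robustness) -> Robustness:
    return lambda signal, t: max(f(signal, t), g(signal, t))


def eventually(f: Robustness) -> Robustness:
    """Best score of f over the samples from t to the end of the signal."""
    return lambda signal, t: max(f(signal, k) for k in range(t, len(signal)))


def always(f: Robustness) -> Robustness:
    """Worst score of f over the samples from t to the end of the signal."""
    return lambda signal, t: min(f(signal, k) for k in range(t, len(signal)))


def squared_error(predicted: float, reference: float) -> float:
    """Hypothetical loss between a model output and a reference robustness."""
    return (predicted - reference) ** 2


# Hypothetical distance readings over five time steps (illustrative only).
distance = [9.0, 7.5, 6.0, 4.0, 5.5]

safe = always(greater_than(3.0))      # satisfied: closest approach is 4.0
too_strict = always(greater_than(5.0))  # violated at the 4.0 sample

reference = safe(distance, 0)
print(reference, too_strict(distance, 0))
print(squared_error(0.5, reference))  # 0.5 stands in for a model's output
```

Because the score is a number rather than a yes/no answer, it gives a training signal that a network's output can be measured against.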

This research was originally intended to allow autonomous vehicles to describe their decision-making processes via formulas that can be checked by experts. The goal is to avoid the current "black box" effect, in which the results of a network's decision-making process can be checked for quality, but the method of making decisions is not easily parsed. Being able to understand the reasons behind the choices a network makes would, in the case of autonomous vehicles, add greatly to such vehicles' safety and make them more viable for general use. The training data used in this project were simulated distance signals from unmanned aerial vehicles over time, but the concept could be expanded to cover the full range of signals an autonomous vehicle produces, such as position, velocity, and acceleration, to make fuller use of the research presented here.

My research advisor is Houssam Abbas, and I also worked with Nicole Fronda on the code!